According to one report, Internet of Things (IoT) devices became significant targets for malware attacks in 2019. Another report found that 100% of the web apps it tested contained at least one security vulnerability, with 85% posing a risk to users.

These statistics make it clear that data and information security is a considerable risk for any organization. To safeguard your web application, it is important to follow the security practices described in this blog.

Top 8 Security Practices for Web Apps

- Preventing denial-of-service (DoS) attacks

A denial-of-service (DoS) attack is a type of cyber attack in which a malicious actor aims to render a computer or other device unavailable to its intended users by interrupting the device's normal functioning. DoS attacks typically function by overwhelming or flooding a targeted machine with requests until normal traffic is unable to be processed.

One simple way to prevent DoS attacks is to limit the number of HTTP calls accepted from a particular machine or IP address. A mechanism such as a rate limiter can take care of this: the system keeps a count of the HTTP calls made by each machine or IP address within a particular time frame and rejects calls beyond the limit. Examples include a rate limiter for Express.js, AWS Shield, or a WAF, or you can create your own.

- If it's a file upload, don't rely only on the MIME type or file extension

For an attacker, a file upload is often the primary point of entry into our system. This may sound strange, but it is true. As developers, we often ignore this part, but an unchecked upload can harm or compromise our system. So we should use several methods to check the file type. Never rely blindly on the file extension; it is the most trivial thing to spoof.

Regardless of the risk to the system, always check the magic number (magic bits) in addition to the extension and MIME type. Also make sure the uploaded file is moved to a secure directory without executable permissions and is assigned a random filename. Otherwise, a file such as script.exe.png will be accepted as an image by the system and can cause harm at any level.

Any wrong or unnecessary executable permission can lead to disaster if we rely on the file extension alone: someone could easily upload a suspicious executable and run it in our environment, causing loss at any level.

Checking the magic number means parsing the file's header structure. You could treat this as an additional step for high-risk cases, i.e., when your threat model involves attackers uploading a malicious file that exploits some weakness. It clearly involves additional work (consuming CPU cycles and thus increasing cost) that you should do only when the benefit is "worth it", but I always recommend it as a best practice.

JavaScript code:

// First 4 bytes of the uploaded file, as lowercase hex
const buffer = req.file.buffer;
const magicNumber = buffer.toString('hex', 0, 4);

Now compare this magic number with the expected value for the claimed file type.

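// Values are lowercase hex so they match the output of buffer.toString('hex')
const MAGIC_NUMBERS = {
  jpg: "ffd8ffe0",
  jpg1: "ffd8ffe1",
  png: "89504e47",
  gif: "47494638",
  obj: "4c01", // only 2 bytes long, so compare it as a prefix
  pdf: "25504446",
  zip: "504b0304"
};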
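To tie the two snippets together, here is a minimal sketch of how the comparison might look. The helper names `detectFileType` and `isUploadAllowed` are hypothetical, the signature list is a subset of the table above, and the rule that the detected type must agree with the extension is an assumption about how strict you want to be.

```javascript
// Known signatures as lowercase hex (subset of the table above).
const SIGNATURES = {
  jpg: ["ffd8ffe0", "ffd8ffe1"],
  png: ["89504e47"],
  gif: ["47494638"],
  pdf: ["25504446"],
  zip: ["504b0304"],
};

// Hypothetical helper: returns the detected type name, or null if unknown.
function detectFileType(buffer) {
  const header = buffer.toString("hex", 0, 4);
  for (const [type, prefixes] of Object.entries(SIGNATURES)) {
    if (prefixes.some((prefix) => header.startsWith(prefix))) {
      return type;
    }
  }
  return null;
}

// Hypothetical helper: accept the upload only if the content, the extension,
// and the list of allowed types all agree.
function isUploadAllowed(filename, buffer, allowedTypes) {
  let extension = filename.split(".").pop().toLowerCase();
  if (extension === "jpeg") extension = "jpg"; // treat both spellings alike
  const detected = detectFileType(buffer);
  return (
    detected !== null &&
    detected === extension &&
    allowedTypes.includes(detected)
  );
}
```

With this check, a Windows executable renamed to script.exe.png is rejected because its first bytes do not match the PNG signature, even though the extension looks harmless.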

On macOS or Linux, you can inspect the magic number (magic bits) of any file with this command:

xxd <file-path> | head

- HTTPS over HTTP

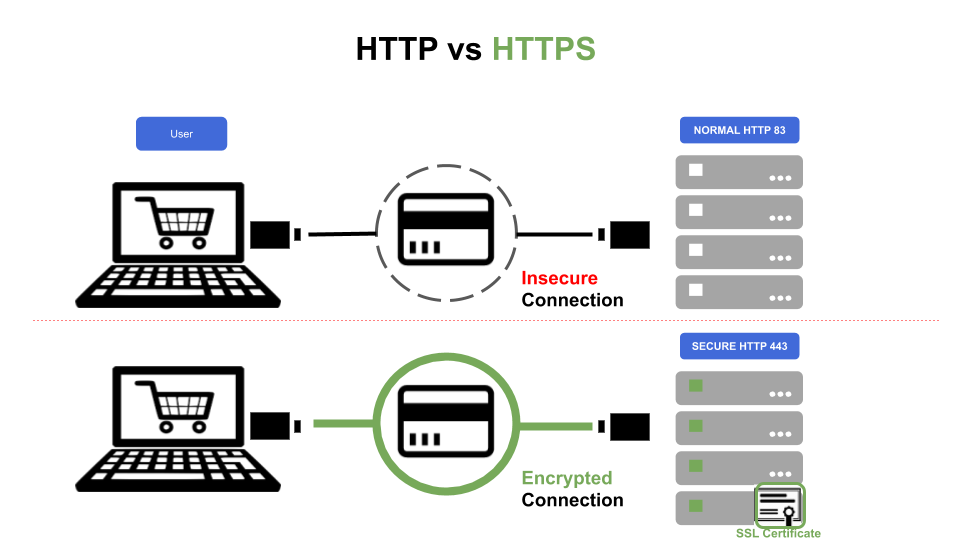

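HTTPS is simply (or not so simply) Hypertext Transfer Protocol Secure. It means that data is not transferred as plain text; instead the connection is protected by Secure Sockets Layer (SSL), a cryptographic protocol built around two keys: the server's public key, which encrypts, and its private key, which decrypts. In practice the key pair is used during the handshake to authenticate the server and agree on a session key, and that session key encrypts the actual traffic.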

Role of SSL in securing information over the network

Secure Sockets Layer (SSL) is a standard security technology (security protocol to be more precise) for establishing an encrypted link between a server and a client—typically a web server (website) and a browser or a mail server and a mail client.

SSL allows sensitive information such as credit card numbers, social security numbers, and login credentials to be transmitted securely. Normally, data sent between browsers and web servers is sent in plain text—leaving your information vulnerable. If an attacker is able to intercept all data being sent between a browser and a web server, they can see and use that information. All browsers come with a mechanism to interact with secured web servers using SSL.

If SSL is good enough, why bother with TLS?

Transport Layer Security (TLS) is a widely adopted security protocol designed to ensure privacy and data security for communications over the network. The primary use of TLS is to encrypt the communication between web applications and servers. TLS can also be used to encrypt other communications such as email, messaging, and voice over IP (VoIP).

TLS evolved from the earlier SSL protocol; it began development as version 3.1 of SSL, which is why the names TLS and SSL are sometimes used interchangeably. All versions of SSL are now deprecated because of known weaknesses, so new deployments should use TLS.

Always send sensitive information in the request body rather than in the URL. Putting it in the URL is a mistake: URLs are easily exposed, for example through browser history, and they are stored in web server logs, where typically the whole URL of each request is recorded. This means that any sensitive data in the URL (e.g. a password) is saved in clear text.

- Request sanitization on the client as well as the server side

For web apps that interact with a server, validating input on both the client and the server is a good way to get speed as well as security, which benefits you and your end users.

With client-side validation, you can quickly check a form against what your database accepts before it is submitted. For example, you can make sure a field does not exceed the number of characters its database column allows, without calling the server and waiting for it to reply that the input is not acceptable. So client-side validation is great for cutting down the number of calls to the server. However, if the user has JavaScript turned off, or sends the request directly (for example with curl), the check has to be done on the server side.
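Because the client-side check can be bypassed, the same rules need to be enforced again on the server. The sketch below shows one way to keep the rules in a single place so both sides can reuse them. The field names, limits, and the `validateInput` helper are hypothetical and only illustrate the idea.

```javascript
// Hypothetical rule set: field names and limits are illustrative only.
const RULES = {
  username: { maxLength: 30, pattern: /^[A-Za-z0-9_]+$/ },
  comment: { maxLength: 500 },
};

// Returns a list of error messages; an empty list means the input passed.
function validateInput(input) {
  const data = input ?? {};
  const errors = [];
  for (const [field, rule] of Object.entries(RULES)) {
    const value = data[field];
    if (typeof value !== "string" || value.length === 0) {
      errors.push(`${field} is required`);
      continue;
    }
    if (value.length > rule.maxLength) {
      errors.push(`${field} must be at most ${rule.maxLength} characters`);
    }
    if (rule.pattern && !rule.pattern.test(value)) {
      errors.push(`${field} contains characters that are not allowed`);
    }
  }
  return errors;
}
```

On the server, a request handler would call `validateInput` on the parsed body and reject the request if the returned list is not empty, regardless of what the browser already checked.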

I would say that server-side validations are the most important, because they keep unwanted data from entering your domain. There are always ways around JavaScript validations, so harmful entries can get through, and there are people out there who want to harm your site. Server-side validation is the last step to verify the data before it enters the point of no return. Make sure everything is sanitized on both the client and the server. Also set a maximum request payload size so that oversized payloads are rejected and load on the server is reduced. This can be done at the web server (Nginx or Apache), at the application server, or even at the infrastructure level.

- Use of JWT and its transmission over the network

JSON Web Token (JWT) is an open standard (RFC 7519) that defines a compact and self-contained way for securely transmitting information between parties as a JSON object. This information can be verified and trusted because it is digitally signed. JWTs can be signed using a secret (with the HMAC algorithm) or a public/private key pair using RSA or ECDSA.

Although JWTs can be encrypted to also provide secrecy between parties, we will focus on signed tokens. Signed tokens can verify the integrity of the claims contained within them, while encrypted tokens hide those claims from other parties. When tokens are signed using public/private key pairs, the signature also certifies that only the party holding the private key is the one that signed it. This is how and why we use JWT in our system for authorization.
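To make the signing step concrete, here is a minimal sketch of an HMAC-SHA256 (HS256) signed token built only with Node's built-in `crypto` module. It assumes a Node.js version that supports the `base64url` encoding (16 or later). The function names are hypothetical, and in a real system a maintained JWT library would normally be used instead of hand-rolled code.

```javascript
const crypto = require("crypto");

// Encode a string as base64url, the encoding JWT uses for each segment.
function base64url(text) {
  return Buffer.from(text, "utf8").toString("base64url");
}

// Hypothetical helper: build header.payload.signature signed with HS256.
function signToken(payload, secret) {
  const header = base64url(JSON.stringify({ alg: "HS256", typ: "JWT" }));
  const body = base64url(JSON.stringify(payload));
  const signature = crypto
    .createHmac("sha256", secret)
    .update(`${header}.${body}`)
    .digest("base64url");
  return `${header}.${body}.${signature}`;
}

// Hypothetical helper: returns the payload if the signature is valid and the
// token has not expired, otherwise null.
function verifyToken(token, secret) {
  const parts = String(token).split(".");
  if (parts.length !== 3) return null;
  const [header, body, signature] = parts;

  const expected = crypto
    .createHmac("sha256", secret)
    .update(`${header}.${body}`)
    .digest();
  const given = Buffer.from(signature, "base64url");
  // timingSafeEqual requires equal lengths, so check that first.
  if (given.length !== expected.length) return null;
  if (!crypto.timingSafeEqual(given, expected)) return null;

  // Only parse the segments after the signature has been checked.
  const { alg } = JSON.parse(Buffer.from(header, "base64url").toString("utf8"));
  if (alg !== "HS256") return null;
  const payload = JSON.parse(Buffer.from(body, "base64url").toString("utf8"));
  const now = Math.floor(Date.now() / 1000);
  if (typeof payload.exp === "number" && payload.exp <= now) return null;
  return payload;
}
```

If anyone changes a claim in the payload, the recomputed signature no longer matches and `verifyToken` returns null, which is the integrity guarantee described above.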

JWTs can also be used for information exchange between two parties, which I would not recommend unless the token is encrypted with a modern algorithm such as AES or RSA (DES is obsolete and should not be used). A token that is only signed can easily be decoded by anyone who obtains it.

Also, we should never keep confidential information in a JWT. The payload should be as small as possible, because a large payload takes longer to decode.
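The following sketch shows why confidential data does not belong in a signed token: the payload is only base64url-encoded, so it can be read without knowing the signing secret. The token here is assembled by hand for illustration, the claim values are made up, and the helper name is hypothetical. It assumes a Node.js version with `base64url` support (16 or later).

```javascript
// Hypothetical helper: read the claims without checking the signature at all.
function decodePayloadWithoutVerifying(token) {
  const [, body] = token.split(".");
  return JSON.parse(Buffer.from(body, "base64url").toString("utf8"));
}

// Build an example token by hand; the third segment is a placeholder because
// reading the payload never touches the signature.
const exampleHeader = Buffer.from(
  JSON.stringify({ alg: "HS256", typ: "JWT" })
).toString("base64url");
const exampleBody = Buffer.from(
  JSON.stringify({ sub: "user-42", email: "user@example.com" })
).toString("base64url");
const exampleToken = `${exampleHeader}.${exampleBody}.placeholder-signature`;

// Anyone holding the token can see every claim it carries.
console.log(decodePayloadWithoutVerifying(exampleToken));
```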

Always set an appropriate expiry for your tokens and use a complex, unique secret for signing them. Also, do not forget to use a salt.

- Checksum: ensure integrity of data over the network

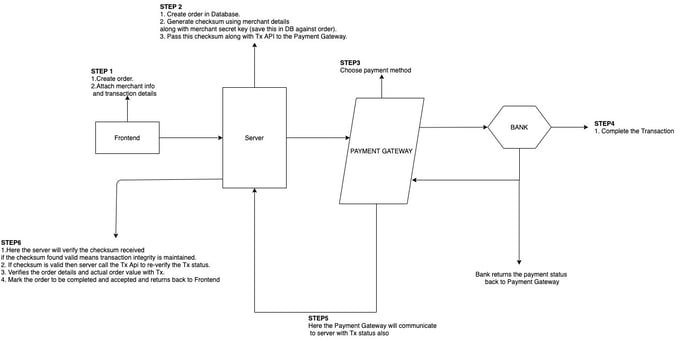

A checksum is a simple error-detection mechanism used to check the integrity of data transmitted over a network. Communication protocols such as TCP, IP, and UDP implement this scheme to determine whether received data was corrupted in transit.

Whenever data or media is transferred from point A to point B, we must be sure that it has not been tampered with and that its integrity was not lost along the way. An attacker can modify data in transit, so we need to confirm that it is intact before proceeding further. A checksum gives us that assurance of integrity from point A to point B.

For a real-life example, 'ccfb91146cba92a3ec5274fee90d2cee35cfda7fd38240b3f65da26d53d28a0b' is the checksum of the VLC player executable for its latest build (3.0.10). When you download it and want to make sure it is an authentic VLC executable, compute the checksum of your downloaded file before installing and match it against the one provided by VLC.

To create a checksum, you can run this command:

shasum -a 256 <file-path>

Here the -a flag selects the algorithm; 256 means SHA-256.
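The same check can be done in code. This is a minimal sketch using Node's built-in `crypto` and `fs` modules; the helper names are hypothetical, and it reads the whole file into memory, which is an assumption that only suits reasonably small files.

```javascript
const crypto = require("crypto");
const fs = require("fs");

// SHA-256 digest of a string or Buffer, as lowercase hex.
function sha256Hex(data) {
  return crypto.createHash("sha256").update(data).digest("hex");
}

// Hypothetical helper: true if the file's SHA-256 matches the published value.
function fileMatchesChecksum(filePath, expectedHex) {
  const actual = sha256Hex(fs.readFileSync(filePath));
  return actual === expectedHex.trim().toLowerCase();
}
```

For a downloaded installer, you would pass the path of the file and the checksum published by the vendor, and only proceed with installation if the function returns true.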

Below is a diagram of an example e-commerce order system that needs a checksum to ensure the integrity and validity of a transaction from any user.

- Security vulnerabilities and Auditing

Developers rely on packages such as utilities, database adapters, and providers to solve problems and save time. These are the dependencies of our project, and they are installed before we use them. We must check and validate the packages we depend on to make sure they cannot harm our system in any way.

Before relying on a package or using it directly, it is good practice to review the package itself. Analyse its popularity, documentation, and possible vulnerabilities. Packages can depend on other packages, and those can have security issues too, so precautions are needed.

A security audit is an assessment of package dependencies for security vulnerabilities. Security audits help you protect your package's users by enabling you to find and fix known vulnerabilities in dependencies that could cause data loss, service outages, unauthorized access to sensitive information, or other issues. Without security auditing, the package code itself can harm and compromise your information and database. Examples of audit tools: Snyk, npm audit.

- API Security

APIs are generally used to access the server or some resources, so we should categorise them according to their purpose: open, public, and internal (private).

Open APIs are open to everyone. Anyone can use them as needed without any authentication, because they are meant to be freely available. These APIs usually serve open data for general-purpose use.

Public/External APIs are generally available for specific purposes or business requirements. Unlike open APIs, they are publicly reachable but require some authorization to validate each request. There are a few ways to authorize the consumer who is trying to use these APIs.

AUTH-TOKEN - We generally use this method when we build apps to solve business problems. Auth tokens are generally JWTs that are created and given to the client when they log into the system. The token confirms the client's identity and carries an expiry time, after which the client must authenticate again.

This token is also used for role-based authorization in the system. It is attached to the request header whenever the client makes a request to the server for a particular resource. On the server side, the token can then be validated for authenticity and its roles checked against the requested resource. If the token passes these checks, the resource is returned to the user; otherwise the server returns 401 or 403, as per the business logic.
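The decision between serving the resource, 401, and 403 can be sketched as a small function over the already verified token claims. The `roles` claim name and the `authorize` helper are hypothetical, and mapping a missing or expired token to 401 and a missing role to 403 is one common convention rather than the only possible business rule.

```javascript
// Hypothetical helper: decide the HTTP status for a request.
// `claims` is the payload of an already verified token, or null if the
// token was missing or failed verification.
function authorize(claims, requiredRole, nowSeconds = Math.floor(Date.now() / 1000)) {
  // No valid identity, or the token has expired: the client must log in again.
  if (!claims || typeof claims.exp !== "number" || claims.exp <= nowSeconds) {
    return 401;
  }
  // Identity is known, but it lacks the role needed for this resource.
  const roles = Array.isArray(claims.roles) ? claims.roles : [];
  return roles.includes(requiredRole) ? 200 : 403;
}
```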

API-KEY - This method is similar to AUTH-TOKEN, as it is also a kind of token or hashed string used to authenticate the consumer. It also needs to be attached to the request, either in the query params or in the headers.

This method is mainly used when we consume data from a third-party provider or a subscription-based service. The API key is provided when you register on the data provider's server or subscribe to the service.
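On the provider side, checking an API key might look like the sketch below. The `x-api-key` header name, the demo key, and the in-memory key store are all assumptions for illustration; a real service would load key hashes from a database or a secret manager. Storing only a hash of each key means a leaked key store does not reveal the keys themselves.

```javascript
const crypto = require("crypto");

// SHA-256 hash of a key, so the store never holds keys in plain text.
function hashKey(key) {
  return crypto.createHash("sha256").update(key).digest("hex");
}

// Hypothetical key store holding the hash of one demo key.
const VALID_KEY_HASHES = new Set([hashKey("demo-key-123")]);

// Hypothetical helper: `headers` is an object with lowercase header names,
// as Node's http module provides them.
function isValidApiKey(headers) {
  const key = headers["x-api-key"];
  if (typeof key !== "string" || key.length === 0) return false;
  return VALID_KEY_HASHES.has(hashKey(key));
}
```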

Private/Internal APIs are usually developed for internal purposes and aren't exposed for public consumption. They are mainly used between services, typically in a microservice architecture, and are consumed by the other microservices in the system. Even here, we should use some kind of internal authorization among these services, despite the APIs being private.

The reason is that we follow a stateless REST architecture, so the microservices act as dumb services: they don't know what is happening elsewhere in the system, because no state is maintained. They just take the request and return the data without knowing the state of any request. This also means unauthorised access can be made and unauthorised actions can happen. To handle this scenario, we should have some kind of internal token shared among these services to ensure the authenticity of requests, even for internal consumption.

Examples of API-key protected third-party services: Twilio, WeatherAPI.

Following these best practices brings a greater sense of security to the architecture. However, the story does not end here. Since technology and the ecosystem are dynamic, it is important to update your approach from time to time. Over time, with so many innovative minds at work, systems and approaches become vulnerable and obsolete. This is something a developer should always look out for.
